At one point during the conference, the AI model was asked to read facial expressions and assess the mood.

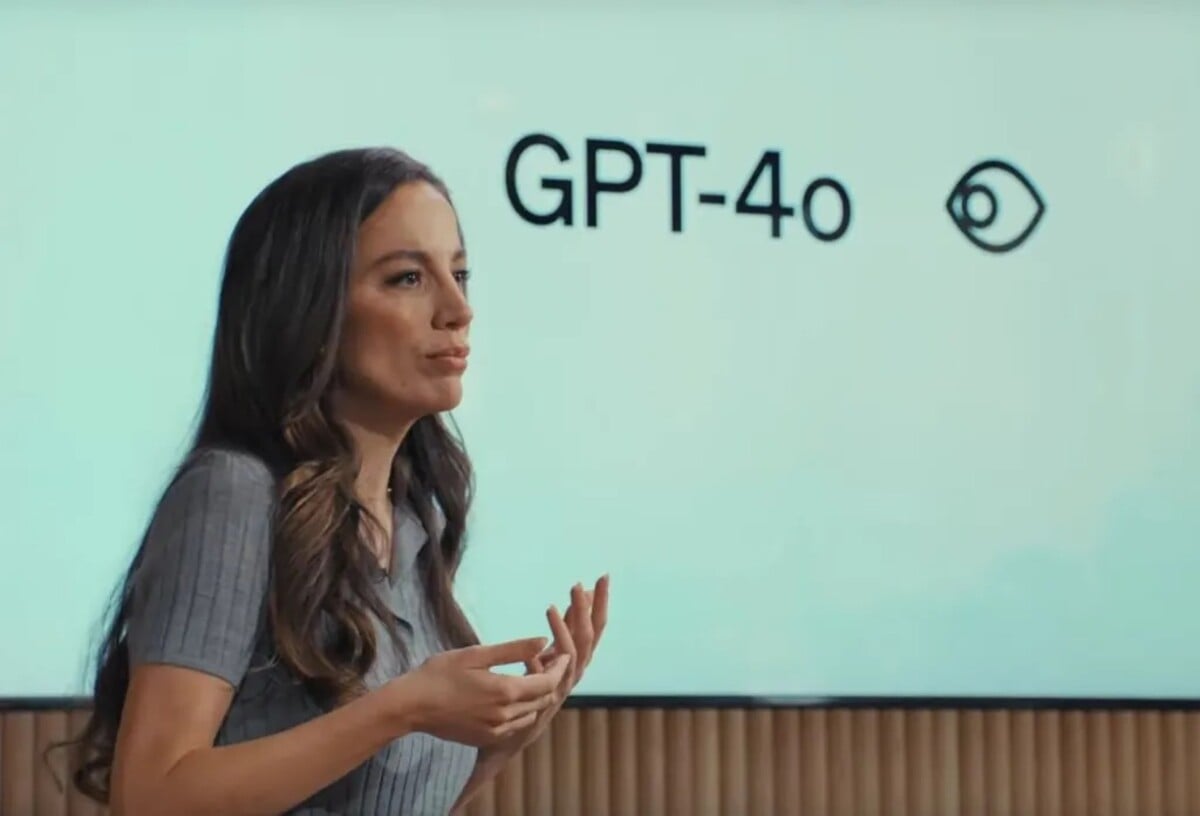

OpenAI has released an update to ChatGPT, its AI chat tool. The upgraded model is named GPT-4o and is an improvement on the existing GPT-4.

The “o” in the name stands for “omni,” from the Latin for “all” or “every.” “We’re announcing GPT-4o, our new flagship model that can reason across audio, vision, and text in real time,” reads the announcement on the company’s website.

The software was previously described as a “groundbreaking milestone in technology,” intended to advance the processing and deep learning capabilities of artificial intelligence. According to The Guardian, the updates OpenAI revealed on Monday, May 13, include better quality and speed in ChatGPT’s international language capabilities.

The tool’s ability to analyze uploaded images, audio, and text documents has also been improved. The company said these features would be rolled out gradually to ensure they are used safely.

The new GPT-4o can even read emotions. At one point during the conference, the AI model was asked to interpret a facial expression and assess the emotions it conveyed. ChatGPT’s voice assistant judged that the person looked happy and cheerful, with a big smile. “Whatever is happening, it looks like you’re in a great mood. Would you like to share your emotions?” ChatGPT said in an energetic female voice.

Its predecessor, GPT-4, could already pass the bar exam with a score in the top ten percent of test-takers. GPT-4o is also expected to help with analyzing charts.

The speed of the new chatbot is also remarkable: it works in real time. Chief Technology Officer Mira Murati conversed with a colleague in Italian, and the AI model translated the Italian into English and the English replies back into Italian. In the future, the goal is for ChatGPT to take in information from live broadcasts so that it can, for example, explain the rules of a game being shown.